Prophet Arena Team

August 01, 2025

Prophet Arena: A Live Benchmark for Predictive Intelligence

Introduction

Forecasting is one of humanity’s most original and most powerful intellectual pursuits — the spark that gave rise to science, and the engine behind modern economics and finance. While today’s AI models can ace bar exams and outperform humans in math competitions, a deeper question remains poorly understood: Can AI systems reliably predict the future by connecting the dots across existing real-world information?

Today, we’re excited to launch Prophet Arena, a benchmark that evaluates the predictive intelligence of AI systems through continuously updated, real-world forecasting tasks. Prophet Arena is the only benchmark that combines all of the following key properties:

-

It tests models’ forecasting capability, a high-level form of intelligence that demands a broad range of skills, including understanding existing information and news sources, reasoning under uncertainty, and making time-sensitive predictions about unfolding events. Most existing benchmarks instead test specific capabilities on questions whose answers are already known at test time.

-

It is designed for human-AI collaboration, with an interface that lets humans supply useful news sources to augment AI systems’ predictions. In turn, the AI generates insights for humans. Through such collaborative interactions, we hope to use AI as a scalable way to aggregate news sources and comprehend the market’s information.

-

It can never be overfitted, because Prophet Arena uses continuously updated real-world forecasting events, and future events are by definition always fresh tests.

-

It elicits AI systems’ probabilistic predictions of events and makes real-world betting decisions accordingly. Hence, strong performance on some metrics (e.g., the Average Return metric) translates directly to positive betting gains in the real world. As a subtle and perhaps counterintuitive caveat, we remark that a high average market return does not necessarily align with high prediction accuracy (see our detailed ranking blog for explanations).

Forecasting as the Next Frontier of AI

Forecasting has long been a central task in machine learning — from predicting price movement to modeling the weather. Decades of research have also produced rich theoretical tools for these domains, such as time-series modeling, online learning, and conformal prediction. So what’s new and what makes the challenge this time different?

This new frontier now challenges us to build general-purpose AI systems that make accurate forecasts across a wide range of domains — potentially without domain-specific fine-tuning or access to specialized datasets. This kind of open-domain forecasting requires combined capabilities that today’s AI systems are only beginning to demonstrate:

-

Probabilistic reasoning — uncertainty quantification, calibration, and statistical thinking.

-

Causality — causal reasoning and modeling of how events unfold and influence one another.

-

Critical thinking — curating relevant information and assessing the credibility of sources before drawing conclusions.

These skills are fundamental to how analysts, investors, policymakers, and scientists reason about the world. A better forecast isn’t just a better guess — it creates real-world value, from improving market efficiency to informing high-stakes decisions.

And yet, most AI benchmarks today are retrospective: they test a model’s ability to recall facts, retrieve existing information, or solve problems with fixed answers. But true intelligence isn’t just about remembering the past; it’s about understanding the present and anticipating what comes next. Prophet Arena evaluates AI systems on this front.

A Natural Solution to Benchmark Contamination

Notably, forecasting also solves a persistent problem in AI evaluation – test set contamination. When we measure model performance on fixed datasets, we cannot be sure whether a model has truly learned to solve the problem — or simply memorized answers seen during training. Forecast-based benchmarks sidestep this concern entirely. You can’t leak tomorrow’s news — because it hasn’t happened yet.

By anchoring evaluations in unresolved, real-world events, Prophet Arena ensures a level playing field. There is no pretraining advantage, no secret fine-tuning trick, no leakage of test samples.[[ref]]

Prophet Arena Design

Prophet Arena is designed to answer a simple yet fundamental question:

How well can AI models predict the outcomes of real-world, unresolved events?

Today’s prediction markets already provide an ideal foundation for this challenge — they offer a rich supply of well-structured events with clearly defined outcomes, along with a competitive baseline of aggregated human predictions. Prophet Arena curates events from platforms such as Kalshi, using the following selection criteria:

-

Popular — Events with strong indicators of public interest, such as high trade volume, liquidity, or volatility.

-

Diverse — A balanced mix across domains including politics, economics, science, sports, and entertainment.

-

Recurring — Repeated event formats (e.g., weekly price movement, earnings announcements) to support consistency and comparability.

To standardize the evaluation of forecasting tasks, Prophet Arena follows a structured pipeline consisting of three key stages:

-

Information Sourcing — For each prediction event, we deploy AI models with search capabilities to gather relevant news reports and organize them into a curated context. This serves as the input for downstream forecasting. We also capture snapshots of the associated market activity — including trading prices and contract volumes — to incorporate market consensus as part of the prediction context.

-

Prediction Submission — Given the same context, each AI model submits a structured forecast: a probability distribution over all possible outcomes, accompanied by a detailed rationale. These rationales are made visible to users, who can assess their value, share feedback on the usefulness of news sources, and contribute alternative information to observe how forecasts shift in response.

-

Outcome Resolution & Evaluation: Steps 1 and 2 are repeated for each event over time until the outcome is realized. To prevent hindsight bias, all forecasts must be submitted before the outcome is known (or each AI model’s knowledge cutoff). Events are resolved according to the underlying prediction market. Once resolved, model predictions are evaluated under different metrics for their statistical accuracy and decision quality (see our discussion below). A live leaderboard tracks and aggregates model performance across time and domains under different scoring metrics, providing a transparent and evolving view of forecasting capability.
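The structured forecast described in the Prediction Submission stage can be pictured as a small record type. The sketch below is illustrative only: the class name `Forecast`, its fields, the validation tolerance, and the assumption that outcomes are mutually exclusive (so probabilities sum to 1) are all hypothetical and not Prophet Arena’s actual schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
import math


@dataclass(frozen=True)
class Forecast:
    """Hypothetical record for one model's forecast on one event."""
    event_id: str                    # hypothetical event identifier
    model: str                       # name of the forecasting model
    probabilities: dict[str, float]  # outcome label -> probability
    rationale: str                   # free-text explanation shown to users
    submitted_at: datetime           # when the forecast was made

    def __post_init__(self) -> None:
        # Assumption: outcomes are mutually exclusive and exhaustive.
        if not self.probabilities:
            raise ValueError("forecast must cover at least one outcome")
        if any(p < 0.0 or p > 1.0 for p in self.probabilities.values()):
            raise ValueError("each probability must lie in [0, 1]")
        total = sum(self.probabilities.values())
        if not math.isclose(total, 1.0, abs_tol=1e-6):
            raise ValueError(f"probabilities sum to {total}, expected 1")

    def is_valid_for(self, resolved_at: datetime) -> bool:
        """A forecast counts only if it was submitted before resolution."""
        return self.submitted_at < resolved_at


forecast = Forecast(
    event_id="example-event",
    model="example-model",
    probabilities={"yes": 0.7, "no": 0.3},
    rationale="Illustrative rationale text.",
    submitted_at=datetime(2025, 8, 1, tzinfo=timezone.utc),
)
print(forecast.is_valid_for(datetime(2025, 8, 2, tzinfo=timezone.utc)))
```

Rejecting malformed distributions at construction time keeps every stored forecast directly scorable, and the timestamp check mirrors the rule that forecasts must precede the outcome.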

Evaluating Forecasts of Uncertainty

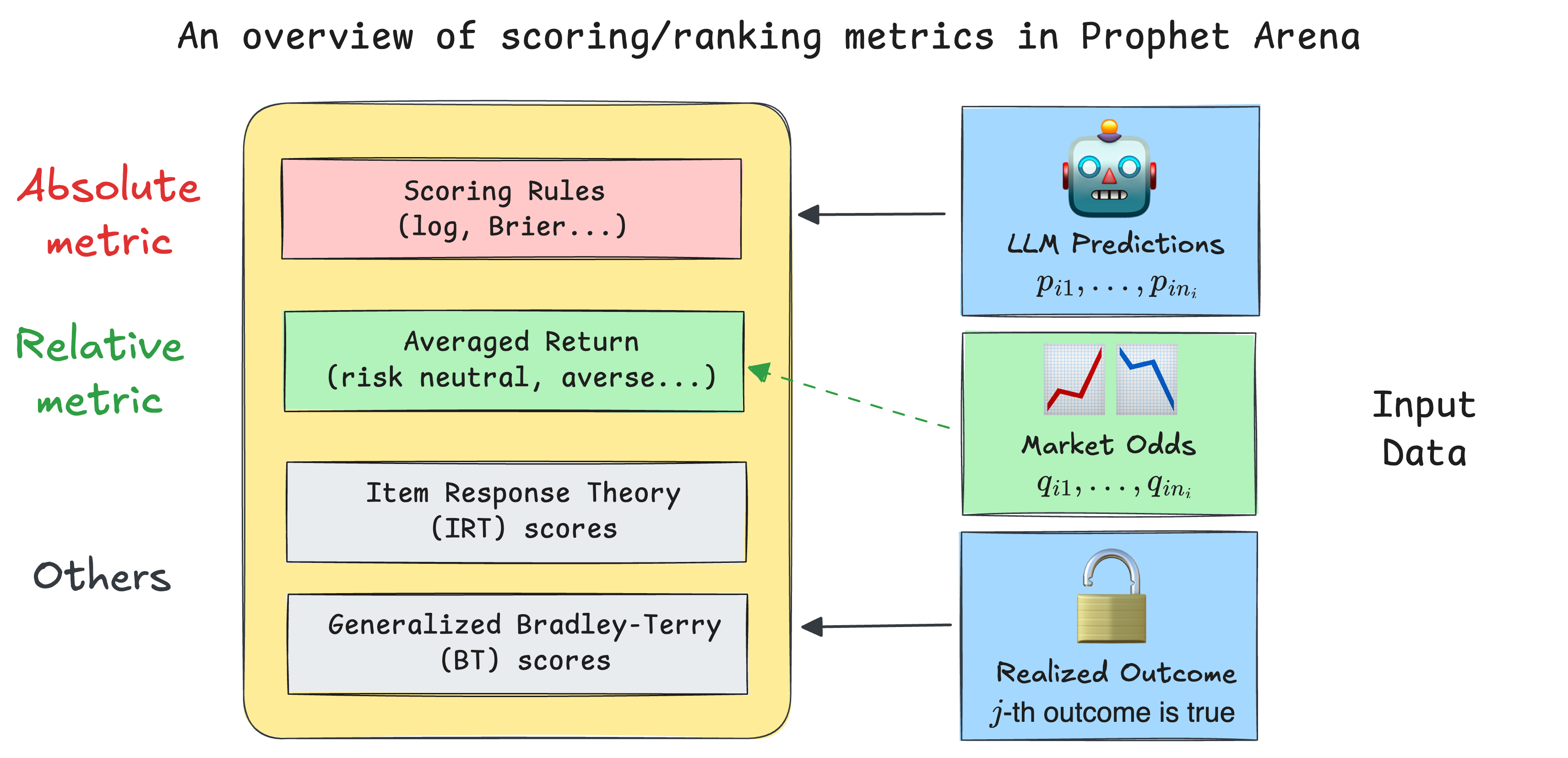

Prophet Arena uses a comprehensive evaluation framework tailored to probabilistic forecasting tasks, designed to serve both LLM developers and real-world practitioners interested in using LLMs for important decision-making. Our leaderboard mainly employs two complementary categories of metrics:

- Absolute Metrics (Scoring Rules):

We primarily utilize the Brier score, a widely adopted proper scoring rule that measures how accurately and confidently AI models predict probabilistic outcomes. Crucially, it captures both prediction accuracy and calibration, ensuring that forecasts reliably reflect real-world uncertainties. This absolute scoring system directly evaluates the LLM’s intrinsic reasoning ability, independent of external factors like market consensus.

- Relative Metrics (Averaged Return):

To bridge predictions with real-world actionability, we also introduce an innovative class of averaged return metrics, derived from utility theory. These metrics simulate a scenario where practitioners consistently use AI-generated probabilities to inform their betting decisions in real prediction markets. Users can flexibly adjust risk preferences to explore various betting strategies, offering a practical insight into the economic value generated by LLM-driven forecasts.
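As a rough illustration of the two metric families, the sketch below computes a multi-outcome Brier score and the net profit of a simple one-unit bet. Both functions are assumptions made for illustration: the Brier convention (sum over outcomes, range 0 to 2) and the risk-neutral betting rule (stake one unit on the contract with the largest ratio of model probability to market price) are not taken from Prophet Arena’s scoring code, and the function names are hypothetical.

```python
def brier_score(probs: dict[str, float], outcome: str) -> float:
    """Multi-outcome Brier score: summed squared error against the
    one-hot realized outcome. Lower is better; 0 is a perfect forecast."""
    return sum((p - (1.0 if o == outcome else 0.0)) ** 2
               for o, p in probs.items())


def unit_bet_return(probs: dict[str, float],
                    prices: dict[str, float],
                    outcome: str) -> float:
    """Net profit from staking one unit on the contract with the largest
    perceived edge (model probability divided by market price).

    Assumes each contract costs `price` (strictly positive) and pays 1
    if its outcome occurs. Returns 0.0 when the model sees no edge and
    therefore places no bet.
    """
    best = max(probs, key=lambda o: probs[o] / prices[o])
    if probs[best] <= prices[best]:
        return 0.0
    # One unit buys 1/price contracts, each paying 1 on a win.
    return 1.0 / prices[best] - 1.0 if best == outcome else -1.0


model = {"yes": 0.7, "no": 0.3}    # illustrative forecast
market = {"yes": 0.6, "no": 0.4}   # illustrative market prices

print(round(brier_score(model, "yes"), 4))               # 0.18
print(round(unit_bet_return(model, market, "yes"), 4))   # 0.6667
print(unit_bet_return(model, market, "no"))              # -1.0
```

The Brier score depends only on the forecast and the realized outcome, whereas the return also depends on the market price. That is one reason the two kinds of metric can rank models differently.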

In addition to these core metrics, Prophet Arena incorporates advanced evaluation methods inspired by statistical and psychometric modeling—like Item Response Theory (IRT) and the Generalized Bradley–Terry (BT) model—to provide deeper insights into model performance. By weighting events according to their informativeness or accounting for the relative strengths of different LLMs, these complementary metrics enrich our leaderboard, providing a more nuanced and comprehensive understanding of predictive intelligence.
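For readers unfamiliar with the two model families named above, their textbook forms are given here as background. These are the standard Bradley–Terry and two-parameter IRT models; the symbols ($\theta_i$, $a_k$, $b_k$) are notation chosen for this sketch, and the generalized variants Prophet Arena uses may differ.

```latex
% Bradley–Terry: probability that model i outperforms model j,
% given latent strengths \theta_i and \theta_j.
\[
  \Pr(i \succ j) = \frac{e^{\theta_i}}{e^{\theta_i} + e^{\theta_j}}
                 = \sigma(\theta_i - \theta_j),
  \qquad \sigma(x) = \frac{1}{1 + e^{-x}}.
\]

% Two-parameter IRT: probability that model i succeeds on event k,
% with event difficulty b_k and discrimination a_k.
\[
  \Pr(\text{success}_{ik}) = \sigma\bigl(a_k(\theta_i - b_k)\bigr).
\]
```

In the IRT form, a larger discrimination $a_k$ means event $k$ separates strong and weak models more sharply, which is one way to read "weighting events according to their informativeness".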

For a deeper dive into our scoring methodology and the reasoning behind our metric design, please visit our detailed scoring and ranking blog post.

What is Our Benchmark Designed for?

Forecasting is gaining traction in recent benchmarks such as MIRAI, RealityBench, FutureBench, and ForecastBench [[ref]]. Similar to our approach, many of these benchmarks source their evaluation tasks from prediction markets and provide contextual signals — including market prices, news articles, and public sentiment — to support model forecasting. At the same time, platforms like Kalshi and Polymarket are beginning to integrate AI models directly into their ecosystems, using them to generate market summaries that help humans make more informed predictions. Prophet Arena shares this foundational vision but introduces several important differences that set it apart:

- Prophet Arena elicits and evaluates statistical forecasts from AIs.

Unlike many benchmarks that require a single (most probable) outcome, we ask AI models to produce probability distributions over possible outcomes. This design is motivated by three core reasons:

-

Richer expression space — Probability distributions allow models to express their confidence levels and uncertainty, not just make hard classifications.

-

Fine-grained evaluation — This enables the use of proper scoring rules (e.g., Brier score) to assess both accuracy and calibration.

-

Actionable predictions — Distributional outputs are more informative and suitable for downstream decision-making tasks.

Despite this, it remains unclear whether current AI systems can produce well-calibrated statistical forecasts. Their internal reasoning processes are often opaque, and uncertainty estimates may be unreliable. While recent research [[ref]] has begun to address this, Prophet Arena provides a platform to evaluate such capabilities directly.
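To see why a proper scoring rule such as the Brier score rewards honest probabilities, consider a single binary event. The short derivation below is a standard textbook argument rather than anything specific to Prophet Arena; $q$ (the true probability), $p$ (the reported probability), and $Y$ (the 0/1 outcome) are notation introduced here.

```latex
\[
  \mathbb{E}\bigl[(p - Y)^2\bigr]
    = q\,(1 - p)^2 + (1 - q)\,p^2
    = (p - q)^2 + q\,(1 - q).
\]
% The first term vanishes only at p = q, so the expected Brier score is
% uniquely minimized by reporting the true probability; the second term
% is the irreducible uncertainty of the event itself.
```

Because the $(p - q)^2$ term penalizes any misreport, a forecaster cannot improve its expected score by overstating or understating its confidence.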

- Prophet Arena is focused on benchmarking general-purpose AIs:

Today’s AI benchmarks tend to fall into two camps: those that evaluate general-purpose LLMs on diverse tasks (e.g., MMLU-Pro, GPQA Diamond, LM Arena), and those that evaluate agent-based workflows with specialized tools (e.g., FutureBench, SWE-Bench, GAIA, τ-bench).

Forecasting is a complex task that involves multi-step agentic workflows like information gathering, data collection, statistical reasoning and analysis. While we expect AI agents to master these sophisticated capabilities over time, Prophet Arena currently focuses on a core forecasting subtask: Given a prediction question and a curated set of contextual documents, can a general-purpose language model produce an accurate and well-calibrated forecast?

This design helps control for context quality and isolates the forecasting ability of the model. Still, as agents become more autonomous and capable, Prophet Arena is designed to scale, enabling evaluation of full-stack forecasting agents with end-to-end workflows.

- Prophet Arena is built to facilitate humans exchanging insights with AIs:

Prophet Arena aims to go beyond benchmarking to enable meaningful collaboration between humans and AI in forecasting. We design a frontend interface to facilitate such interactions. From human to AI, users can curate relevant information and highlight key signals to see how AI models’ predictions change accordingly. From AI to human, our interface helps users explore the rationales behind models’ event predictions to inform their own beliefs about event outcomes. We also provide a transparent leaderboard that breaks down model performance across different domains and under different evaluation metrics.

We expect this design to create a powerful feedback loop with meaningful real-world impact. Prophet Arena offers a unique window into how AI models perceive and reason about the world, and where their judgments diverge from human intuition. Over time, we envision AI systems becoming active participants in prediction markets, contributing to a more liquid, dynamic trading environment. By aligning human insight with AI-driven forecasts, we aim to enhance collective foresight and inform high-stakes policy-making across our society.

Early Findings

Future Development and Outlook

The current launch of Prophet Arena is just the first step, and we aim to build a platform that augments how we understand and anticipate the world with AI-powered insights.

To make event forecasting more interactive and accessible for human users. We are developing tools that allow users to engage directly with AI models by asking questions like “How likely is this to happen?” and receiving not only forecasts, but clear, interpretable explanations. Users will be able to examine model rationales, suggest alternative sources, and test how predictions change with new information. Over time, we plan to introduce incentive mechanisms that reward users for contributing high-quality context, evaluating AI outputs, and actively improving the forecasting process.

To support customizable workflows for advanced forecasting agents. While the current benchmark evaluates general-purpose language models on fixed prediction contexts, future iterations will support agents that retrieve information, collect and analyze data using statistical and machine learning methods, and construct forecasts through multi-step reasoning. This will allow for more autonomous, adaptive agents tailored to specific domains — and will enable us to evaluate not just prediction accuracy, but the quality and transparency of the decision-making process behind each forecast.

To build the foundation for an AI prophet. We view forecasting as a natural extension of language modeling: if next-word prediction minimizes perplexity in text, forecasting minimizes uncertainty in the real world — one event at a time. Prophet Arena aims to collect rich, time-stamped data on AI-generated forecasts and use it to advance research on building next-generation models with strong capabilities in probabilistic reasoning, uncertainty calibration, and real-world understanding.

We’re just getting started. If you’re working on forecasting models, agent pipelines, or human-AI interaction, we invite you to test your systems on Prophet Arena. Help us explore what it really means for AI to be intelligent — not just in language, but in foresight.

References

- Singh, Shivalika, et al. "The leaderboard illusion." arXiv preprint arXiv:2504.20879 (2025).

- Karger, Ezra, et al. "ForecastBench: A Dynamic Benchmark of AI Forecasting Capabilities." The Thirteenth International Conference on Learning Representations (2025).

- Ye, Chenchen, et al. "Mirai: Evaluating LLM agents for event forecasting." arXiv preprint arXiv:2407.01231 (2024).

- “Evaluation of AI Agents at Samaya.” Samaya AI, 24 Mar. 2025, samaya.ai/blog/evaluation-of-agents-at-samaya/.

- “Back to the Future: Evaluating AI Agents on Predicting Future Events.” Together AI, www.together.ai/blog/futurebench. Accessed 26 July 2025.

- Damani, Mehul, et al. "Beyond Binary Rewards: Training LMs to Reason About Their Uncertainty." arXiv preprint arXiv:2507.16806 (2025).